| organizer | details |
|---|---|
| Ed Bueler | time & room: Thurs. 3:45-4:45 Chapman 206 106 206 |
| elbueler@alaska.edu | CRN: 38950 (= MATH F692P 002) |
| | maximum credits: 1.0 |

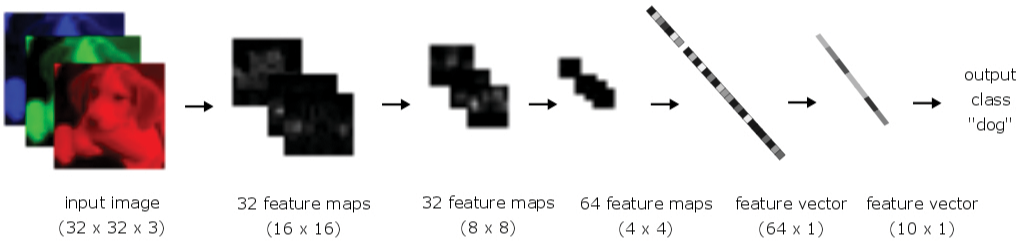

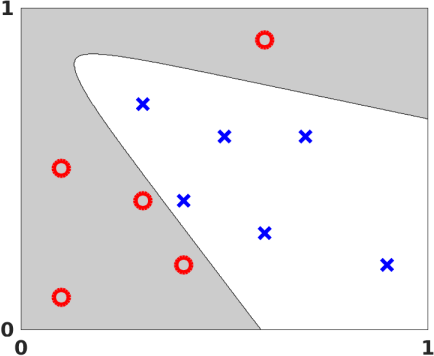

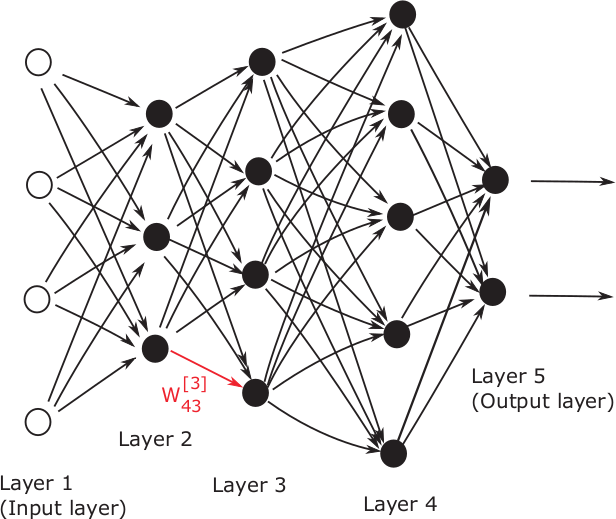

Multilayered artificial neural networks, and other styles of machine learning, are now pervasive in application fields. At the heart of this deep learning revolution are familiar concepts from mathematics, starting with calculus, linear algebra, and optimization.

This seminar is a participant-driven exploration of mathematical aspects of machine learning, including any related topics of interest, not limited to neural networks. We will start from the basic ideas that underlie neural networks, from an applied mathematics perspective, and branch out from there.

We hope to include any interested students and faculty from across UAF. The mathematics should be at the introductory graduate or advanced undergraduate level, and any related, compelling application of mathematics is fair game!

Software libraries and statistical concepts are valuable topics for discussion and demonstration, and topics from statistics and computer science are welcome, though they may also be covered elsewhere, e.g. in the graduate courses STAT 621 Nonparametric Statistics and CS 605 Artificial Intelligence.

To get started, the organizer expects to give a talk based on the introductory review article by Higham & Higham (below): What is a deep neural network? How is one trained? What is the stochastic gradient method? Back-propagation? How is training related to least-squares problems?
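As a rough preview of those questions, here is a minimal sketch in plain Python. It is not taken from the Higham & Higham article. It assumes a tiny network (2 inputs, 3 sigmoid hidden neurons, 1 sigmoid output), a least-squares cost, and a made-up set of eight labeled points. All function names, the data, the learning rate, and the step count are hypothetical choices for illustration only.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, rng):
    """Random weights and biases for a network n_in -> n_hidden -> 1."""
    W1 = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.gauss(0, 0.5) for _ in range(n_hidden)]
    W2 = [rng.gauss(0, 0.5) for _ in range(n_hidden)]
    b2 = rng.gauss(0, 0.5)
    return [W1, b1, W2, b2]

def forward(params, x):
    """Return the hidden activations a1 and the scalar network output a2."""
    W1, b1, W2, b2 = params
    a1 = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
          for row, b in zip(W1, b1)]
    a2 = sigmoid(sum(w * a for w, a in zip(W2, a1)) + b2)
    return a1, a2

def cost(params, data):
    """Least-squares cost: mean over the data of (1/2) (y - output)^2."""
    return sum(0.5 * (y - forward(params, x)[1]) ** 2 for x, y in data) / len(data)

def backprop(params, x, y):
    """Gradient of (1/2) (y - output)^2 for one sample, via the chain rule."""
    W1, b1, W2, b2 = params
    a1, a2 = forward(params, x)
    d2 = (a2 - y) * a2 * (1.0 - a2)                        # dC/dz at the output
    d1 = [d2 * w * a * (1.0 - a) for w, a in zip(W2, a1)]  # dC/dz at hidden layer
    gW1 = [[d * xi for xi in x] for d in d1]
    gW2 = [d2 * a for a in a1]
    return [gW1, d1, gW2, d2]

def sgd(params, data, rate, steps, rng):
    """Stochastic gradient: each step uses one randomly chosen sample."""
    for _ in range(steps):
        x, y = rng.choice(data)
        gW1, gb1, gW2, gb2 = backprop(params, x, y)
        W1, b1, W2, b2 = params
        W1 = [[w - rate * g for w, g in zip(wr, gr)] for wr, gr in zip(W1, gW1)]
        b1 = [b - rate * g for b, g in zip(b1, gb1)]
        W2 = [w - rate * g for w, g in zip(W2, gW2)]
        b2 = b2 - rate * gb2
        params = [W1, b1, W2, b2]
    return params

# Made-up data: label 1.0 if the coordinates sum to more than 1, else 0.0.
data = [((0.1, 0.1), 0.0), ((0.3, 0.4), 0.0), ((0.1, 0.5), 0.0), ((0.4, 0.2), 0.0),
        ((0.6, 0.9), 1.0), ((0.9, 0.7), 1.0), ((0.8, 0.8), 1.0), ((0.7, 0.5), 1.0)]

rng = random.Random(0)
params0 = init_params(2, 3, rng)
cost_before = cost(params0, data)
params = sgd(params0, data, rate=0.5, steps=4000, rng=rng)
cost_after = cost(params, data)
print("cost before training:", cost_before)
print("cost after training: ", cost_after)
```

Training here is a nonlinear least-squares problem: the cost is a sum of squared residuals, but the residuals depend nonlinearly on the weights and biases, so an iterative method such as the stochastic gradient method replaces the normal equations used in linear least squares.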

Resources: One goal of the seminar will be to identify and collect some open resources, and there is a separate page already started for that purpose:

For example, the seminar starting point will be this excellent survey article:

Credit: Students who expect to earn 1.0 credits for this seminar should plan to give at least one (casual!) half-hour talk sometime during the semester. Anyone can receive 0.0 credits under any conditions!

Prerequisites: Graduate standing or permission of instructor.